Investing in Atom Computing

Backstory of our Seed stage lead investment into Atom Computing

In 1969, Venrock was one of the first investors in a crazy, misfit group of inventors building a company to reinvent ‘computers’. They named it Intel and what ensued kicked off the most innovative period in modern history. Fast forward to today, we are excited to announce our investment into Atom Computing, the most tractable and pragmatic approach to building a scalable Quantum Computer. This is a completely and radically different paradigm in computing that could define the next 50 years of global innovation.

The foundation of the Quantum Computer was introduced broadly by Nobel laureate Richard Feynman, who believed that because quantum computers would use quantum physics to emulate the physical world, they could solve problems that classical computers would never have the power to tackle. Quantum Computing uses the laws of quantum physics to synthetically entangle atoms into a quantum state and hold them there for long enough to run computations. Unlike a classical computer, which uses physical transistors and logic gates to represent a binary 0 or 1 output in a sequential architecture, a quantum bit, known as a qubit, can represent a 0 and a 1 at the same time, and operate on all possible permutations of a calculation simultaneously.
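To make "a 0 and a 1 at the same time" a little more concrete, here is a minimal sketch of a single simulated qubit. It is only an illustration on a classical machine, not how Atom Computing or any real quantum hardware is programmed. The function name `hadamard` and the tuple representation of the state are our own assumptions; the Hadamard gate itself is the standard textbook operation for creating an equal superposition.

```python
import math

def hadamard(state):
    """Apply the standard Hadamard gate to a single-qubit state.

    `state` is a pair (amplitude_of_0, amplitude_of_1). This tuple layout is
    an illustrative choice, not an API from any quantum library.
    """
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))

# A qubit that is definitely 0, like a classical bit.
zero = (1.0, 0.0)

# After the gate, the qubit carries weight on both 0 and 1 at once.
superposed = hadamard(zero)

# Measurement probabilities are the squared magnitudes of the amplitudes.
probabilities = tuple(abs(x) ** 2 for x in superposed)
print(probabilities)  # approximately equal odds of reading 0 or 1
```

A classical bit would have to be either `(1, 0)` or `(0, 1)`; the qubit can sit anywhere in between until it is measured.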

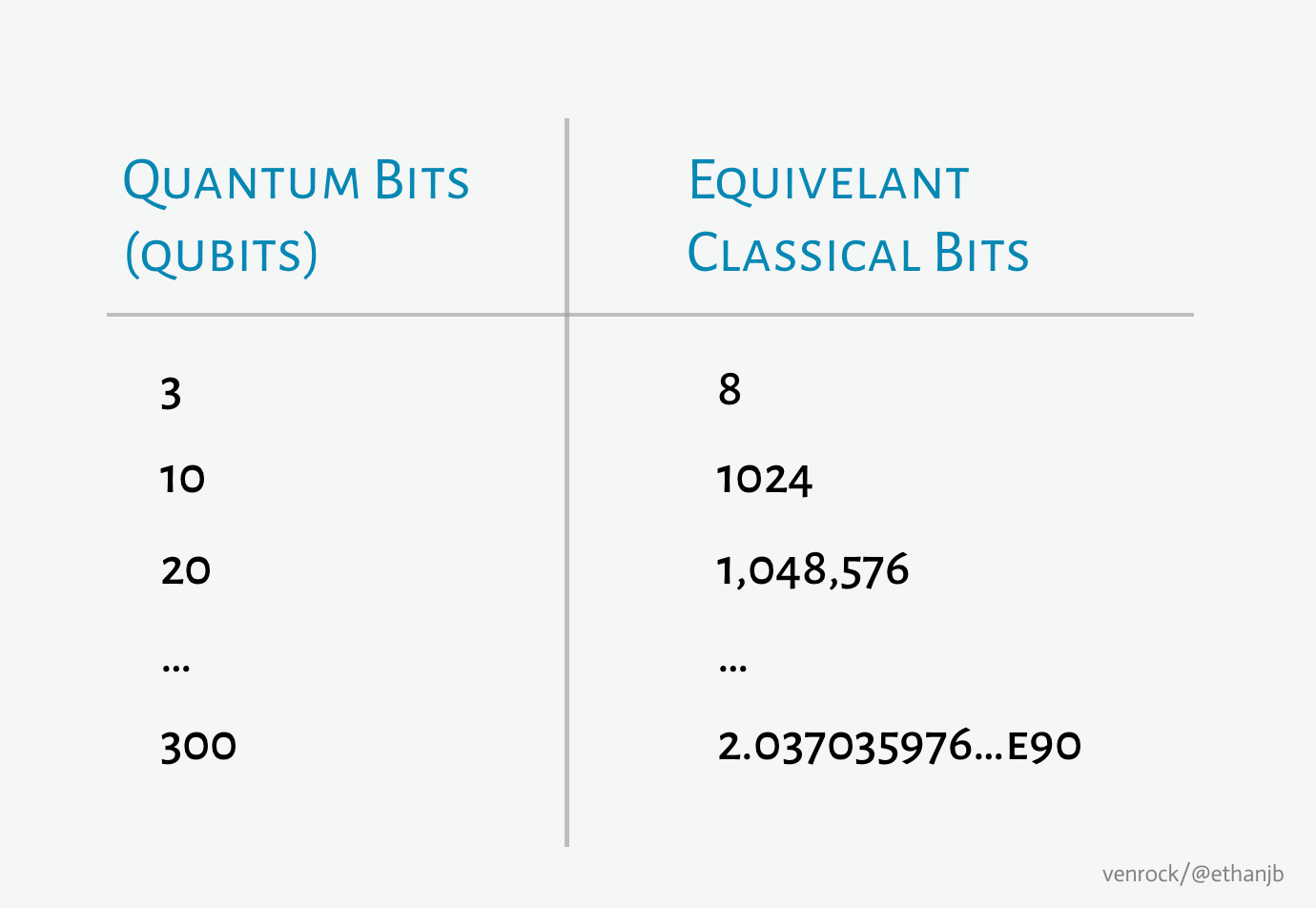

To put the difference into perspective, let’s compare the power of a single bit. In a classical computer, adding a single bit provides an incremental gain in power: going from 8 bits to 9 bits improves processing power by just 1 bit. In a quantum computer, adding a single qubit doubles the total power of the quantum computer (a 2^n factor), e.g. 3 qubits = 8 classical bits, 10 qubits = 1024 classical bits, 20 qubits = 1M classical bits, and 300 qubits = more classical bits than atoms in the known universe (the number is 91 digits long). A 300 qubit quantum computer may have more compute power than all supercomputers on earth, combined.
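The arithmetic behind the 2^n factor is easy to check. The short sketch below is our own illustration: the function name `classical_equivalent` is hypothetical, and the figure of roughly 10^80 atoms in the known universe is a commonly quoted estimate that we are assuming here, not a number from the Atom Computing team.

```python
# Commonly quoted rough estimate of atoms in the known universe (assumption).
ATOMS_IN_UNIVERSE_ESTIMATE = 10 ** 80

def classical_equivalent(n_qubits: int) -> int:
    """Return 2^n, the doubling factor described above for n qubits."""
    return 2 ** n_qubits

for n in (3, 10, 20, 300):
    value = classical_equivalent(n)
    digits = len(str(value))
    bigger = value > ATOMS_IN_UNIVERSE_ESTIMATE
    print(f"{n:>3} qubits -> a {digits}-digit number, exceeds atom estimate: {bigger}")
```

Python integers have arbitrary precision, so 2^300 is computed exactly rather than rounded to a floating-point approximation.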

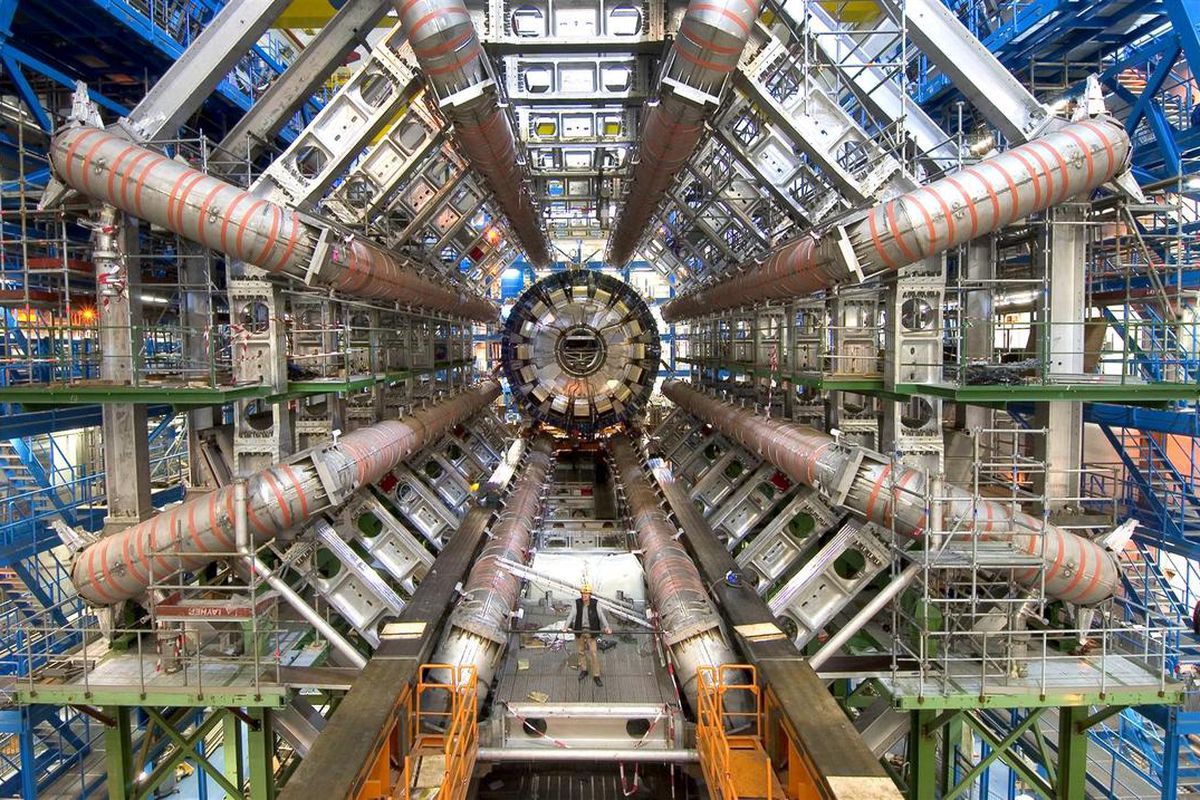

Quantum Computers promise to unlock a new dimension of computing capabilities that are not possible today with classical computers, regardless of their size, compute power, or parallelization. We will gain new insights into our universe (the Large Hadron Collider at CERN captures 5 trillion bits of data per second), enable high-fidelity simulations of weather patterns that will allow us to precisely predict and understand developing hurricanes weeks earlier, and unlock new capabilities in deep learning and AI, allowing for more creative and complex modeling with less known data across autonomous cars, image recognition, and complex decision making.

With such a transformational technology theoretically feasible, we spent the last few years investigating how far we have progressed on the scientific and theoretical discovery curve: What were the emerging approaches? What would be the challenges to scale? Who would be the most likely team to lead us to a truly tractable approach? We went deep into the various approaches, from superconducting Josephson junctions to ion traps and spinning electrons, and we racked our brains over what the world could look like if these attempts became realities.

The sober reality was that we were still in the invention stage, the equivalent of the pre-transistor, vacuum tube ENIAC digital computer. They were unreliable, couldn’t maintain computational states, and were very inefficient. Teams were trying to invent the technological equivalent of the transistor and integrated circuit to solve the reliability and scale problem. And as we saw in the semiconductor industry in the 1960s, the first team to solve this would become the market makers for the technology.

The ENIAC, one of the earliest electronic general-purpose computers built, was limited by the physical resources and wires required for every additional bit of computing power

Investment Thesis

Understanding that we were still in the theoretical discovery phase helped us develop a clear thesis on what it would take for quantum computing to become a tractable problem. We came up with the following criteria:

1. An architectural approach that could scale to a large number of individually controlled and maintained qubits.

2. Could demonstrate long coherence times in order to maintain and feed back on the quantum entangled state for long enough to complete calculations.

3. Designed to have limited sensitivities to environmental noise and thus simplify the problem of maintaining quantum states.

4. Could scale up the number of qubits without needing to scale up the physical resources (i.e. cold lines, physical wires, isolation methods) required to control every incremental qubit.

5. Could scale up qubits in both proximity and volume in a single architecture to eventually support a million qubits.

6. Could scale up without requiring new manufacturing techniques, material fabrication, or yet to be invented technologies.

7. Lastly, the system could be error corrected at scale with a path to sufficient fault tolerance in order to sustain logical qubits to compute and validate the outputs.

Without solving all of the above, the problem would still be one of scientific exploration and invention, not a tractable engineering problem.

That was until we met Ben and the Atom Computing team.

Turning Quantum Computing into a tractable engineering problem

As one of the world’s leading researchers in atomic clocks and neutral atoms, Ben built the world’s fastest atomic clock, which at the time of publication was considered the most precise and accurate measurement ever performed. Through that effort and his Ph.D., Ben showed that neutral atoms could be more scalable, and that it is possible to build a stable solution to create and maintain controlled quantum states. He used his expertise to lead efforts at Intel on their 10nm semiconductor chip, and then to lead research and development of the first cloud-accessible quantum computer at Rigetti.

Ben soon realized that neutral atoms could be a more attractive building block to create a large-scale quantum system, and left to start Atom Computing.

As we went deep into Ben and team’s approach, we found the most pragmatic and tractable approach to building a quantum computer and scaling it to eventually millions of qubits: an approach that did not rely on new fabrication or materials, was built on 40+ years of research and the principles behind it (1)(2)(3)(4)(5), and was backed by the team’s deep history and expertise in the secret sauce they designed. As a result, a system designed around wireless qubits, with long coherence times and significant individual control at scale, could meaningfully scale to over a million qubits and deliver on the real promise of an error-corrected quantum system.

What we realized was that Ben and team had turned what we had widely believed to be a pursuit of scientific discovery into potentially a tractable engineering problem. In classical Silicon Valley parlance, we want every technology we build to ‘change the world.’ Quantum, however, really can reshape our world.

As a result, we had the privilege of leading their seed round in August, and we are thrilled to join and support Ben and team in this audacious journey to bring a true, error-corrected, long coherence time, scalable quantum computer to the masses. Creating a new paradigm for computational power is a world-changing idea, and much like the qubits we’ll build, we couldn’t be more excited* to join them in this mission.