Atom Computing’s Series B

Congrats to the Atom Computing team on their $60M Series B.

Since we announced Atom Computing’s seed round in 2018, we thought it would be fun to revisit the original thesis behind our investment and compare it with where things stand today.

Looking out to the future of Quantum Computing

We’ve long been believers in the near-term reality of quantum computing. Quantum computers promise to unlock a new dimension of computing capabilities that are not possible with today’s classical computers, regardless of their size, compute power, or parallelization.

In 2020, we wrote about:

- Setting the stage for a commercial era of quantum computers

- Drivers accelerating the age of commercial-ready quantum computing

From risk analysis and Monte Carlo simulations to determining chemical ground states, dynamic simulations, modeling FeMoco (the iron-molybdenum cofactor behind biological nitrogen fixation), image and pattern recognition, and more, quantum computing will unlock answers to fundamental questions with economic impact in the tens to hundreds of billions of dollars.

Our original hypothesis for quantum computing (QC) came from the belief that, regardless of Moore’s law, classical computers are fundamentally limited by a sequential processing architecture. Even with breakthroughs in SoCs, 3D integrated memory, optical interconnects, ferroelectrics, ASICs, beyond-CMOS devices, and more, sequential processing means time is the uncontrollable constraint.

For complex, polynomial, or combinatorial problem sets whose answers are probabilistic in nature, sequential processing is simply not feasible because of the sheer amount of time the computation would take. This is where quantum computing, even with a few hundred qubits, can begin to offer transformational capabilities.
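To make the scaling argument concrete, here is a minimal Python sketch of one standard yardstick: the memory a classical computer needs to store the full state vector of n qubits, assuming one 16-byte complex amplitude per basis state. This is an illustrative simplification, not a model of any particular system; specialized classical simulators can avoid storing the full state vector for some circuits.

```python
# Bytes needed to hold a full n-qubit state vector on a classical machine,
# assuming one complex128 amplitude (16 bytes) per basis state.
BYTES_PER_AMPLITUDE = 16


def state_vector_bytes(n_qubits: int) -> int:
    """Return the memory, in bytes, for all 2**n_qubits complex amplitudes."""
    return (2 ** n_qubits) * BYTES_PER_AMPLITUDE


if __name__ == "__main__":
    for n in (10, 30, 50, 100):
        print(f"{n:>3} qubits -> {state_vector_bytes(n):.3e} bytes")
```

By this measure, 30 qubits already require 16 GiB and 50 qubits about 16 PiB; each added qubit doubles the requirement, which is why brute-force classical simulation runs out of room long before a few hundred qubits.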

Historically, however, the challenges in quantum computing have stemmed from a lack of individual qubit control, sensitivity to environmental noise, limited coherence times, limited total qubit volume, overbearing physical resource requirements, and limited error correction. Overcoming each of these is a prerequisite for bringing quantum computing into real-world use.

Thesis for Long-Term Quantum Computing

This understanding helped us develop a clear thesis for what a scalable architecture would need to look like when evaluating potential investments (reproduced from our original seed post):

- An architectural approach that could scale to a large number of individually controlled and maintained qubits.

- Could demonstrate long coherence times in order to maintain, and provide feedback on, the entangled quantum state for long enough to complete calculations.

- Designed to have limited sensitivity to environmental noise, simplifying the problem of maintaining quantum states.

- Could scale up the number of qubits without needing to scale up the physical resources (i.e. cold lines, physical wires, isolation methods) required to control every incremental qubit.

- Could scale up qubits in both proximity and volume in a single architecture to eventually support a million qubits.

- Could scale up without requiring new manufacturing techniques, material fabrication, or yet-to-be-invented technologies.

- The system could be error corrected at scale with a path to sufficient fault tolerance in order to sustain logical qubits to compute and validate the outputs.

We led Atom Computing’s seed round in 2018 believing that we were backing a world-class team, and that an architecture based on neutral atoms would be the right building block for a scalable system.

Three Years Later…

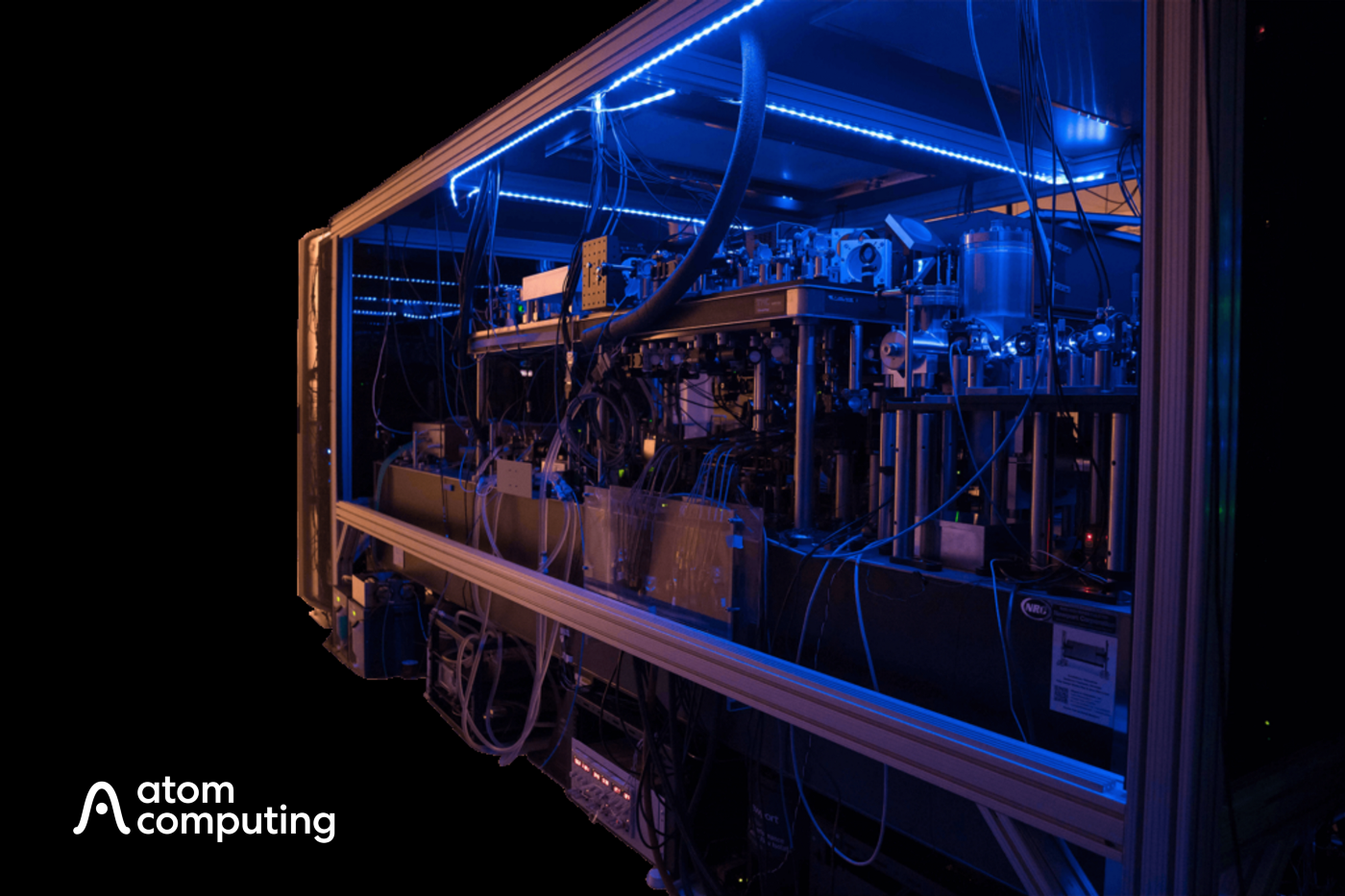

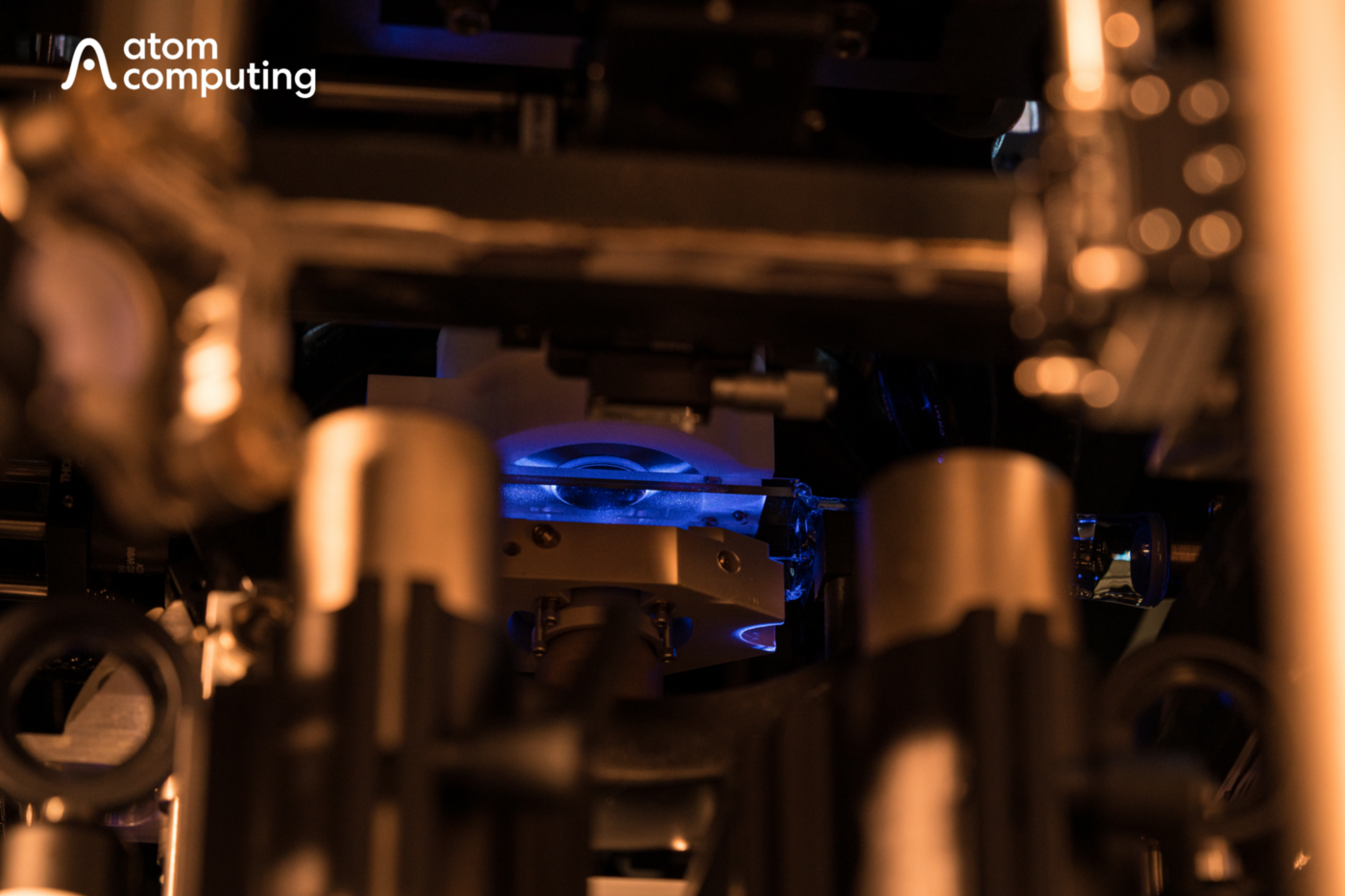

Atom Computing was the first to build nuclear spin qubits out of optically trapped atoms. It reached 100 qubits in under two years from founding, the fastest time to 100 qubits ever, which remains a high-water mark for the QC industry in both qubit count and time.

They demonstrated the longest coherence time ever recorded for a quantum computer with multiple qubits, at over 40 seconds, compared with milliseconds or microseconds for the next-best systems. This cements the case that neutral atoms produce the highest-quality qubits, with higher fidelity, longer coherence times, and the ability to execute independent gates in parallel compared with any other approach. Long coherence is a critical precursor to error correction and eventual fault tolerance.

They demonstrated scaling up to 100 qubits, wirelessly controlled, in a free-space region less than 40 μm across. There are no physical lines or resources for each qubit, no dilution refrigerators, and no isolation requirements. In the coming years they’ll show over 100,000 qubits in the same space that a single qubit occupies in a superconducting approach.

And they proved they could recruit a world-class team of executives to bring the Atom Computing platform to market: Rob Hays joined as CEO from Lenovo and Intel, Denise Ruffner joined as Chief Business Officer from IonQ and IBM Quantum, and Justin Ging joined as Chief Product Officer from Honeywell Quantum.

They proved they could build a machine with a large number of individually controlled and maintained atoms, with the longest coherence time on record, without the need for complex isolation chambers or resources, able to scale up quickly and densely, and requiring no new manufacturing, materials, or physics.

As the team begins bringing the Atom Computing platform to market with its second-generation system to run breakthrough commercial use cases, the next three years will be even more exciting.

We couldn’t be more proud of the team and what they’ve accomplished. The last three years have brought many firsts for the quantum computing industry. We can’t wait to share what happens in the next three years.